Probability Markov Chains Queues And Simulation Ebookers

- Probability Markov Chains Queues And Simulation Pdf

- Probability Markov Chains Queues And Simulation Ebookers 1

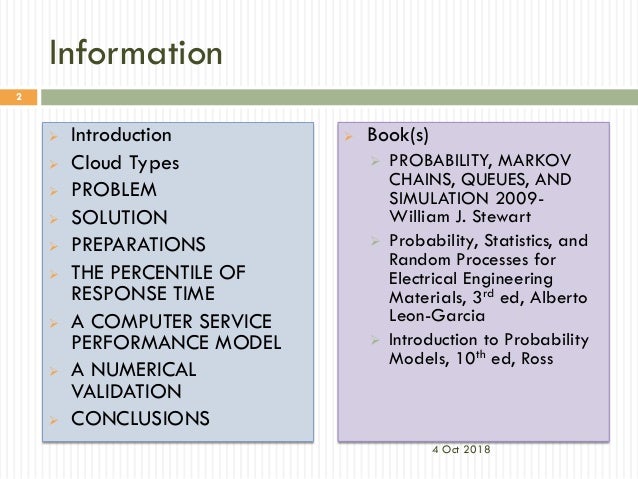

'Probability, Markov Chains, Queues, and Simulation' provides a modern and authoritative treatment of the mathematical processes that underlie performance modeling. The detailed explanations of mathematical derivations and numerous illustrative examples make this textbook readily accessible to graduate and advanced undergraduate students taking courses in which stochastic processes play a fundamental role. The textbook is relevant to a wide variety of fields, including computer science, engineering, operations research, statistics, and mathematics.

The textbook looks at the fundamentals of probability theory, from the basic concepts of set-based probability, through probability distributions, to bounds, limit theorems, and the laws of large numbers. Discrete and continuous-time Markov chains are analyzed from a theoretical and computational point of view. Topics include the Chapman-Kolmogorov equations; irreducibility; the potential, fundamental, and reachability matrices; random walk problems; reversibility; renewal processes; and the numerical computation of stationary and transient distributions. The M/M/1 queue and its extensions to more general birth-death processes are analyzed in detail, as are queues with phase-type arrival and service processes.

The M/G/1 and G/M/1 queues are solved using embedded Markov chains; the busy period, residual service time, and priority scheduling are treated. Open and closed queueing networks are analyzed. The final part of the book addresses the mathematical basis of simulation.

Each chapter of the textbook concludes with an extensive set of exercises. An instructor's solution manual, in which all exercises are completely worked out, is also available (to professors only). Numerous examples illuminate the mathematical theories. Carefully detailed explanations of mathematical derivations guarantee a valuable pedagogical approach.


A diagram representing a two-state Markov process, with the states labelled E and A. Each number represents the probability of the Markov process changing from one state to another state, with the direction indicated by the arrow. For example, if the Markov process is in state A, then the probability it changes to state E is 0.4, while the probability it remains in state A is 0.6.

A Markov chain is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. In continuous time, it is known as a Markov process.
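The two-state process in the caption can be sketched as a small simulation. This is only an illustration: the probabilities for state A (0.6 stay, 0.4 move to E) come from the caption, while the row for state E is not given in the text and is assumed here so that the example is complete. The names `TRANSITIONS`, `step`, and `simulate` are hypothetical.

```python
import random

# Transition probabilities of a two-state chain with states "A" and "E".
# The row for "A" is taken from the caption; the row for "E" is an
# assumption made only so the example can run.
TRANSITIONS = {
    "A": {"A": 0.6, "E": 0.4},
    "E": {"A": 0.7, "E": 0.3},  # assumed values
}


def step(state, rng):
    """Draw the next state using only the current state."""
    targets = list(TRANSITIONS[state])
    weights = [TRANSITIONS[state][t] for t in targets]
    return rng.choices(targets, weights=weights, k=1)[0]


def simulate(start, n_steps, seed=0):
    """Return a sample path of n_steps + 1 states starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1], rng))
    return path


path = simulate("A", 10_000)
share_in_a = path.count("A") / len(path)
print(f"fraction of steps spent in A: {share_in_a:.3f}")
```

Note that `step` never looks at anything except the current state, which is exactly the dependence structure described above. Under the assumed row for E, the long-run share of time in A should settle near 0.7 / (0.4 + 0.7).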

It is named after the Russian mathematician Andrey Markov.

Markov chains have many applications as statistical models of real-world processes, such as studying cruise control systems in motor vehicles, queues or lines of customers arriving at an airport, currency exchange rates and animal population dynamics.

Markov processes are the basis for general stochastic simulation methods known as Markov chain Monte Carlo, which are used for simulating sampling from complex probability distributions, and have found application in Bayesian statistics and artificial intelligence.

The adjective Markovian is used to describe something that is related to a Markov process.

Definition

A Markov process is a stochastic process that satisfies the Markov property (sometimes characterized as 'memorylessness'). In simpler terms, a Markov process is a process for which one can make predictions for its future based solely on its present state just as well as one could knowing the process's full history. In other words, conditional on the present state of the system, its future and past states are independent.

A Markov chain is a type of Markov process that has either a discrete state space or a discrete index set (often representing time), but the precise definition of a Markov chain varies. For example, it is common to define a Markov chain as a Markov process in either discrete or continuous time with a countable state space (thus regardless of the nature of time), but it is also common to define a Markov chain as having discrete time in either countable or continuous state space (thus regardless of the state space).

Types of Markov chains

The system's state space and time parameter index need to be specified. The following table gives an overview of the different instances of Markov processes for different levels of state space generality and for discrete time versus continuous time:

| | Countable state space | Continuous or general state space |
|---|---|---|
| Discrete-time | (discrete-time) Markov chain on a countable or finite state space | Markov chain on a measurable state space (for example, Harris chain) |
| Continuous-time | Continuous-time Markov process or Markov jump process | Any continuous stochastic process with the Markov property (for example, the Wiener process) |

Note that there is no definitive agreement in the literature on the use of some of the terms that signify special cases of Markov processes.

Usually the term 'Markov chain' is reserved for a process with a discrete set of times, that is, a discrete-time Markov chain (DTMC), but a few authors use the term 'Markov process' to refer to a continuous-time Markov chain (CTMC) without explicit mention. In addition, there are other extensions of Markov processes that are referred to as such but do not necessarily fall within any of these four categories. Moreover, the time index need not necessarily be real-valued; as with the state space, there are conceivable processes that move through index sets with other mathematical constructs. Notice that the general state space continuous-time Markov chain is general to such a degree that it has no designated term.

While the time parameter is usually discrete, the state space of a Markov chain does not have any generally agreed-on restrictions: the term may refer to a process on an arbitrary state space. However, many applications of Markov chains employ finite or countably infinite state spaces, which have a more straightforward statistical analysis. Besides time-index and state-space parameters, there are many other variations, extensions and generalizations. For simplicity, most of this article concentrates on the discrete-time, discrete state-space case, unless mentioned otherwise.

Transitions

The changes of state of the system are called transitions.

The probabilities associated with various state changes are called transition probabilities. The process is characterized by a state space, a transition matrix describing the probabilities of particular transitions, and an initial state (or initial distribution) across the state space. By convention, we assume all possible states and transitions have been included in the definition of the process, so there is always a next state, and the process does not terminate.

A discrete-time random process involves a system which is in a certain state at each step, with the state changing randomly between steps. The steps are often thought of as moments in time, but they can equally well refer to physical distance or any other discrete measurement. Formally, the steps are the integers or natural numbers, and the random process is a mapping of these to states.
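The three ingredients named above (state space, transition matrix, initial distribution) are enough to compute the distribution of the chain after any number of steps. The following is a minimal sketch in plain Python; the three-state matrix `P` and the helper names are hypothetical and chosen only for illustration.

```python
def propagate(dist, matrix):
    """One step of the chain: new_dist[j] = sum_i dist[i] * matrix[i][j]."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def distribution_after(dist, matrix, n_steps):
    """Distribution over states after n_steps transitions."""
    for _ in range(n_steps):
        dist = propagate(dist, matrix)
    return dist


# Hypothetical three-state chain. Each row is a probability distribution
# over the next state, so each row sums to one.
P = [
    [0.5, 0.5, 0.0],
    [0.25, 0.5, 0.25],
    [0.0, 0.5, 0.5],
]
initial = [1.0, 0.0, 0.0]  # start in state 0 with certainty

for n in (0, 1, 2, 50):
    print(n, [round(p, 4) for p in distribution_after(initial, P, n)])
```

For this particular matrix the printed distributions stop changing after enough steps, which illustrates the point that statistical properties of the future can be predicted even though individual states cannot.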

The Markov property states that the conditional probability distribution for the system at the next step (and in fact at all future steps) depends only on the current state of the system, and not additionally on the state of the system at previous steps.

Since the system changes randomly, it is generally impossible to predict with certainty the state of a Markov chain at a given point in the future. However, the statistical properties of the system's future can be predicted. In many applications, it is these statistical properties that are important.

History

Andrey Markov studied Markov processes in the early 20th century, publishing his first paper on the topic in 1906. Markov processes in continuous time were discovered long before Markov's work, in the form of the Poisson process. Markov was interested in studying an extension of independent random sequences, motivated by a disagreement with Pavel Nekrasov, who claimed independence was necessary for the weak law of large numbers to hold. In his first paper on Markov chains, published in 1906, Markov showed that under certain conditions the average outcomes of the Markov chain would converge to a fixed vector of values, so proving a weak law of large numbers without the independence assumption, which had been commonly regarded as a requirement for such mathematical laws to hold.

Markov later used Markov chains to study the distribution of vowels in Eugene Onegin, written by Alexander Pushkin, and proved a central limit theorem for such chains.

In 1912 Henri Poincaré studied Markov chains on finite groups with an aim to study card shuffling. Other early uses of Markov chains include a diffusion model, introduced by Paul and Tatyana Ehrenfest in 1907, and a branching process, introduced by Francis Galton and Henry William Watson in 1873, preceding the work of Markov.

After the work of Galton and Watson, it was later revealed that their branching process had been independently discovered and studied around three decades earlier by Irénée-Jules Bienaymé. Starting in 1928, Maurice Fréchet became interested in Markov chains, eventually publishing a detailed study on them in 1938.

Andrei Kolmogorov developed in a 1931 paper a large part of the early theory of continuous-time Markov processes. Kolmogorov was partly inspired by Louis Bachelier's 1900 work on fluctuations in the stock market as well as Norbert Wiener's work on Einstein's model of Brownian movement. He introduced and studied a particular set of Markov processes known as diffusion processes, for which he derived a set of differential equations describing the processes.

Independent of Kolmogorov's work, Sydney Chapman derived in a 1928 paper an equation, now called the Chapman–Kolmogorov equation, in a less mathematically rigorous way than Kolmogorov, while studying Brownian movement. The differential equations are now called the Kolmogorov equations or the Kolmogorov–Chapman equations. Other mathematicians who contributed significantly to the foundations of Markov processes include William Feller, starting in the 1930s, and then later Eugene Dynkin, starting in the 1950s.

A continuous-time Markov chain $(X_t)_{t \ge 0}$ is defined by a finite or countable state space $S$, a transition rate matrix $Q$ with dimensions equal to that of the state space, and an initial probability distribution defined on the state space. For $i \neq j$, the elements $q_{ij}$ are non-negative and describe the rate of the process transitions from state $i$ to state $j$.
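A transition rate matrix of this kind can be sketched in a few lines of plain Python. The rates below are hypothetical. The sketch assumes the usual convention that each diagonal entry is minus the sum of the other entries in its row, and it also uses the standard facts (not stated in the text above) that the holding time in state i is exponential with rate -q_ii and that the next state is j with probability q_ij / (-q_ii).

```python
def make_generator(rates, n_states):
    """Build a transition rate matrix Q from off-diagonal rates.

    `rates` maps (i, j) with i != j to a non-negative rate q_ij. Each
    diagonal entry is set to minus the sum of the other entries in its
    row, so every row of Q sums to zero.
    """
    q = [[0.0] * n_states for _ in range(n_states)]
    for (i, j), rate in rates.items():
        if i == j or rate < 0:
            raise ValueError("rates must be non-negative and off-diagonal")
        q[i][j] = rate
    for i in range(n_states):
        q[i][i] = -sum(q[i][j] for j in range(n_states) if j != i)
    return q


def jump_probabilities(q, i):
    """Probabilities of the next state when the chain leaves state i."""
    exit_rate = -q[i][i]
    if exit_rate == 0:
        raise ValueError("state is absorbing; the chain never leaves it")
    return [0.0 if j == i else q[i][j] / exit_rate for j in range(len(q))]


# Hypothetical rates for a three-state chain.
Q = make_generator({(0, 1): 2.0, (1, 0): 1.0, (1, 2): 3.0, (2, 1): 0.5}, 3)
for row in Q:
    print(row, "row sum:", sum(row))
print("mean holding time in state 1:", 1.0 / -Q[1][1])
print("jump probabilities from state 1:", jump_probabilities(Q, 1))
```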

The elements $q_{ii}$ are chosen such that each row of the transition rate matrix sums to zero, while the row sums of a probability transition matrix in a (discrete) Markov chain are all equal to one.

There are three equivalent definitions of the process: the infinitesimal definition, the jump chain/holding time definition, and the transition probability definition.

A Bernoulli scheme is a special case of a Markov chain where the transition probability matrix has identical rows, which means that the next state is independent even of the current state (in addition to being independent of the past states). A Bernoulli scheme with only two possible states is known as a Bernoulli process.

Applications

Research has reported the application and usefulness of Markov chains in a wide range of topics such as physics, chemistry, biology, medicine, music, game theory and sports.

Physics

Markovian systems appear extensively in thermodynamics and statistical mechanics, whenever probabilities are used to represent unknown or unmodelled details of the system, if it can be assumed that the dynamics are time-invariant, and that no relevant history need be considered which is not already included in the state description.

For example, a thermodynamic state operates under a probability distribution that is difficult or expensive to acquire. Therefore, the Markov chain Monte Carlo method can be used to draw samples randomly from a black box to approximate the probability distribution of attributes over a range of objects.

The paths, in the path integral formulation of quantum mechanics, are Markov chains.

Markov chains are used in lattice QCD simulations.

Chemistry

The enzyme (E) binds a substrate (S) and produces a product (P).

Each reaction is a state transition in a Markov chain.

A reaction network is a chemical system involving multiple reactions and chemical species. The simplest stochastic models of such networks treat the system as a continuous-time Markov chain with the state being the number of molecules of each species and with reactions modeled as possible transitions of the chain. Markov chains and continuous-time Markov processes are useful in chemistry when physical systems closely approximate the Markov property. For example, imagine a large number n of molecules in solution in state A, each of which can undergo a chemical reaction to state B with a certain average rate. Perhaps the molecule is an enzyme, and the states refer to how it is folded. The state of any single enzyme follows a Markov chain, and since the molecules are essentially independent of each other, the number of molecules in state A or B at a time is n times the probability a given molecule is in that state.

The classical model of enzyme activity, Michaelis–Menten kinetics, can be viewed as a Markov chain, where at each time step the reaction proceeds in some direction. While Michaelis–Menten is fairly straightforward, far more complicated reaction networks can also be modeled with Markov chains.

An algorithm based on a Markov chain was also used to focus the fragment-based growth of chemicals towards a desired class of compounds such as drugs or natural products.

As a molecule is grown, a fragment is selected from the nascent molecule as the 'current' state. It is not aware of its past (that is, it is not aware of what is already bonded to it).

It then transitions to the next state when a fragment is attached to it. The transition probabilities are trained on databases of authentic classes of compounds.

Also, the growth (and composition) of copolymers may be modeled using Markov chains. Based on the reactivity ratios of the monomers that make up the growing polymer chain, the chain's composition may be calculated (for example, whether monomers tend to add in alternating fashion or in long runs of the same monomer). Due to steric effects, second-order Markov effects may also play a role in the growth of some polymer chains.

Similarly, it has been suggested that the crystallization and growth of some epitaxial oxide materials can be accurately described by Markov chains.

Biology

Markov chains are used in various areas of biology.

Queueing theory

Markov chains are the basis for the analytical treatment of queues. Agner Krarup Erlang initiated the subject in 1917. This makes them critical for optimizing the performance of telecommunications networks, where messages must often compete for limited resources (such as bandwidth).

Numerous queueing models use continuous-time Markov chains. For example, an M/M/1 queue is a CTMC on the non-negative integers where upward transitions from i to i + 1 occur at rate λ according to a Poisson process and describe job arrivals, while transitions from i to i – 1 (for i ≥ 1) occur at rate μ (job service times are exponentially distributed) and describe completed services (departures) from the queue.
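The M/M/1 description above translates fairly directly into code. The sketch below is illustrative only: it uses the standard geometric stationary distribution of the M/M/1 queue (valid when λ < μ), which is not derived in the text, and a small event-driven simulation of the CTMC. The function names and the parameter values λ = 1, μ = 2 are assumptions made for the example.

```python
import random


def mm1_stationary(lam, mu, max_jobs):
    """Stationary probabilities pi_0..pi_max_jobs of an M/M/1 queue.

    Uses the standard geometric form pi_i = (1 - rho) * rho**i with
    rho = lam / mu, which requires rho < 1.
    """
    rho = lam / mu
    if not 0 < rho < 1:
        raise ValueError("need 0 < lam < mu for a stationary distribution")
    return [(1 - rho) * rho**i for i in range(max_jobs + 1)]


def simulate_mm1(lam, mu, horizon, seed=0):
    """Simulate the CTMC and return the time-average number of jobs."""
    rng = random.Random(seed)
    t, jobs, area = 0.0, 0, 0.0
    while t < horizon:
        # Total rate out of the current state: arrivals always, services
        # only when at least one job is present.
        rate = lam + (mu if jobs > 0 else 0.0)
        dwell = min(rng.expovariate(rate), horizon - t)
        area += jobs * dwell
        t += dwell
        if t >= horizon:
            break
        if rng.random() < lam / rate:
            jobs += 1  # arrival: i -> i + 1
        else:
            jobs -= 1  # departure: i -> i - 1
    return area / horizon


pi = mm1_stationary(1.0, 2.0, 5)
print("pi_0..pi_5:", [round(p, 4) for p in pi])
print("simulated mean jobs:", round(simulate_mm1(1.0, 2.0, 20_000.0), 3))
```

With ρ = λ/μ = 0.5 the theoretical mean number of jobs is ρ / (1 − ρ) = 1, so the simulated time average should land close to that value for a long enough horizon.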

Music

A second-order Markov chain can be introduced by considering the current state and also the previous state. Higher, nth-order chains tend to 'group' particular notes together, while 'breaking off' into other patterns and sequences occasionally. These higher-order chains tend to generate results with a sense of structure, rather than the 'aimless wandering' produced by a first-order system.

Markov chains can be used structurally, as in Xenakis's Analogique A and B. Markov chains are also used in systems which use a Markov model to react interactively to music input.

Usually musical systems need to enforce specific control constraints on the finite-length sequences they generate, but control constraints are not compatible with Markov models, since they induce long-range dependencies that violate the Markov hypothesis of limited memory. In order to overcome this limitation, a new approach has been proposed.

Baseball

Markov chain models have been used in advanced baseball analysis since 1960, although their use is still rare.

Each half-inning of a baseball game can be modeled as a Markov chain whose state is the number of outs together with the position of the runners. During any at-bat, there are 24 possible combinations of number of outs and position of the runners (three possible out counts times eight runner configurations). Mark Pankin shows that Markov chain models can be used to evaluate runs created for both individual players as well as a team. He also discusses various kinds of strategies and play conditions: how Markov chain models have been used to analyze statistics for game situations such as bunting and base stealing, and differences when playing on grass vs. AstroTurf.

Markov text generators

Markov processes can also be used to generate superficially real-looking text given a sample document. Markov processes are used in a variety of recreational 'parody generator' software (see Jeff Harrison and Academias).

Probabilistic forecasting

Markov chains have been used for forecasting in several areas: for example, price trends, wind power, and solar irradiance. The Markov chain forecasting models utilize a variety of settings, from discretizing the time series, to hidden Markov models combined with wavelets, and the Markov chain mixture distribution model (MCM).
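Returning to the Markov text generators mentioned above, a word-level generator can be sketched in a few lines. This is a toy illustration, not the method of any particular program named in the text; the sample sentence and the helper names `build_model` and `generate` are hypothetical. The `order` parameter also shows the first-order versus higher-order distinction: with `order=2` the state is the last two words instead of just the last one.

```python
import random
from collections import defaultdict


def build_model(words, order=1):
    """Map each run of `order` consecutive words to the words that follow it."""
    model = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        model[state].append(words[i + order])
    return dict(model)


def generate(model, start, length, seed=0):
    """Generate up to `length` words, stopping early at a dead-end state."""
    rng = random.Random(seed)
    output = list(start)
    state = tuple(start)
    while len(output) < length and state in model:
        # Followers that occur more often in the sample are listed more
        # often, so rng.choice picks them proportionally more often.
        output.append(rng.choice(model[state]))
        state = tuple(output[-len(start):])
    return output


sample = "the cat sat on the mat and the cat ran".split()
first_order = build_model(sample, order=1)
second_order = build_model(sample, order=2)
print(first_order[("the",)])
print(" ".join(generate(second_order, ("the", "cat"), 8)))
```

Storing repeated followers in a list is a deliberately simple way to encode transition probabilities; a production generator would more likely store counts.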
